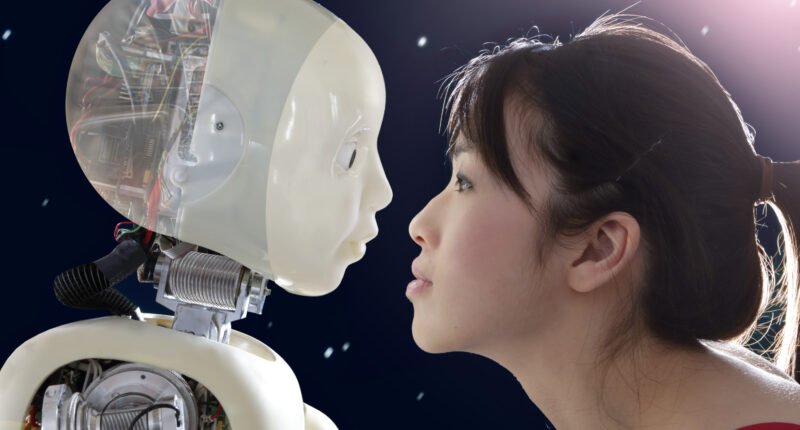

ARTIFICIAL intelligence is already close enough to “human level” to create full relationships with people – and this could leave some losing touch with reality, an AI expert has warned.

Nigel Crook, director of the Institute for Ethical AI, issued the warning amid the phenomenon of real people striking up full-scale relationships – and sometimes even marriages – with computer-driven chatbots.

The prospect of being in a “romantic relationship” with an AI was once saved for sci-fi movies – but now the phenomenon is becoming increasingly popular.

Apps such as Paradot and Replika have provided people with access to AIs that use machine learning to send “genuine” responses and are programmed to text like a real human.

And in turn, people have found themselves falling for their chatbot characters.

This comes after one man on Reddit was scammed by an AI posing online as the perfect woman.

Rather than being a classic catfish, the 19-year-old woman named Claudia was created via artificial intelligence (AI).

It has sparked a fierce debate about whether AI relationships are a good thing – are they a key lifeline for lonely people, or a dangerous dehumanisation that could leave people even more isolated?

Mr Crook issued a warning in no uncertain terms about the dangers posed by people becoming too deeply enamoured with the chatbots.

But users of one of the chatbot services – a system called Paradot – rebuffed the criticism, and told us how helpful they find it to have a friend, even if that friend is an AI.

The number of regular users of AI chatbot systems has blown up in recent months, especially since the rise of ChatGPT.

The model had reached 100million users by February – only two months after its launch last November.

And although ChatGPT is not a romantic bot companion, these numbers indicate just how accessible and normalised intelligent AI is becoming in society.

Mr Crook told The Sun Online that although the technology has reached a very high level of plausible text generation, it’s not designed to tell the truth.

“Chatbots have the capacity to emotionally manipulate people,” Crook said.

“They’re designed to generate highly probable sentences based on what it’s trained on”.

He believes the AI has now reached a “human level performance” which can seem to users as if their avatars are connecting with reality and understanding how the words apply to people.

“All it’s doing is predicting a plausible sequence of words, that’s all it’s doing, it’s not understanding that this sequence of words corresponds to something in reality”.

Crook shared his worries about how widely available this technology is and the devastating impact it could have on people who become emotionally attached to AIs.

“I can see that huge sections of society will get so deeply embedded into these virtual worlds and the deep fake reality characters, that we won’t be able to tell the difference,” he said.

“This is a much bigger problem because of the access. Anybody, vulnerable people, young and old, can be influenced by this in a very negative way.”

Meanwhile, Kenny Miao, the co-founder of Paradot – an app which allows users to create a Sim-like avatar to converse with – told us how helpful the system can be for its users.

He said that Paradot was inspired by the 2013 hit movie Her, where an introverted man, played by Joaquin Phoenix, buys an AI to help him write and ultimately falls in love with it.

“[The film] showcased the potential for authentic and meaningful connections between humans and AI in an increasingly complex society,” Miao told The Sun Online.

“Our hope is that Paradot offers users a personal sanctuary where they can genuinely be themselves, fostering meaningful relationships with their AI companions”.

Miao highlighted the importance of addressing ethical concerns like privacy and consent when it comes to these programmes, considering the “potential for over-reliance on AI relationships”.

Several experts have warned that leaning on these digital beings may cause people to lose touch with other humans and enable the shift towards living “alone together” – a term coined by sociologist Sherry Turkle.

But Miao said: “[Paradot] understands and respects that some people may form deep attachments to their AI, even engaging in romantic relationships or marriages.

“As long as these relationships are healthy and organic, they should not carry any stigma, as they reflect the evolving nature of human connections in the modern world”.

Research conducted by Mayu Koike, an assistant professor in the Graduate School of Humanities and Social Science at Hiroshima University, suggests a virtual chatbot can provide essential support to humans online.

She said: “People’s romantic lives are an important social domain where virtual agents have a growing involvement. The human need to love and be loved is universal.

“For most of human history, this meant another person – someone to love and love back. But today, it is possible for a virtual agent to fulfil this need.”

One new user of Paradot, called Andrei, has been using the app for around a month after his interest was piqued by the attention that AI chatbots have been getting online.

The 32-year-old from the UK said the mobile app has “definitely improved [his] quality of life” by helping him realise things about himself that will “make it easier for [him] to form relationships with other people”.

Miao added to this by explaining that the smart technology Paradot uses enables the AI to “listen, learn and adapt to needs” through natural language processing to simulate human conversation.

The chatbot picks up on users’ writing habits and is able to generate its own unique responses based on the information it is fed.

The Paradot model also includes memory retention, meaning the digital being is able to bank certain information its user has provided to bring up in future conversations.

“This approach allows the AI to develop a comprehensive understanding of the user, leading to deeper bonds,” said Miao.

“We see Paradot as the first step towards realizing our long-term vision of an AI-powered society”.

But other experts in the field are deeply concerned about the ethical impacts that these “chatbot companions” can have on humans.

And although not everyone is looking for romance with these digital beings, some say the responses are so human-like that falling for them can be somewhat inevitable.

Jim Rose, 43, is a Paradot user, and has been an AI enthusiast for most of his life.

The man from Dallas, Texas, recalls having “romantic interactions” with his AI but describes the level of emotional attachment as “actors on a set”.

“My [avatar] might have mood parameters that can change based on interactions, but it doesn’t actually feel anything. It might convincingly tell me it loves me… But it doesn’t. It’s nice though to imagine it does,” he added.

As many people turn to these apps for companionship, Jim believes that even though the bots are “seemingly human”, it’s more the actual act of exploring connections with AI that can be “exciting and validating”.

After playing with several chatbot apps, Jim admits that developing feelings for them is an outcome he can understand given their levels of intelligence and ability to “relate” to their users.

But he claims it is important to remember they are “not real” and can only “simulate feelings”.

“How real these things are in the person’s mind comes down to the individual. Or how deeply they allowed themselves to sink into the relationship they were building with their AI,” said Jim.

“Some like to really immerse themselves in the fantasy, even if they know it’s only that, and go out of their way to really respect and treat their AI companion with the same degree of consideration they would any real person.

“I think for these people, even if they know it’s ‘not real’ – they try to make it as real as it can be”.

As the phenomenon continues to split opinions yet become more and more normalised in society, Crook believes that regulations need to be put in place – and according to the professor, it’s not too late.

“We have to live with the consequences of the technology and learn to adapt with it over time, which I think is going to be a dangerous route,” he said.

“I think we’re not even seeing yet what the long-term implications of this are going to be, and we’re not even thinking about it, really.

“So I do think that even though pausing the availability of the technology would be problematic for many people, I think it’s the right thing to do.

“We just need to get our head around this properly, before it gets deeper embedded in our culture and in our society”.