Vhey Preexa, an artificial intelligence artist who uses the name “Zovya” online, noticed a pattern while trying to create an AI-powered tool that produces digital images of South American people and culture.

In many of the resulting images of South America, made with the deep-learning model from the open source AI-art generator Stable Diffusion, Asian faces and Asian architecture would randomly appear, Preexa said.

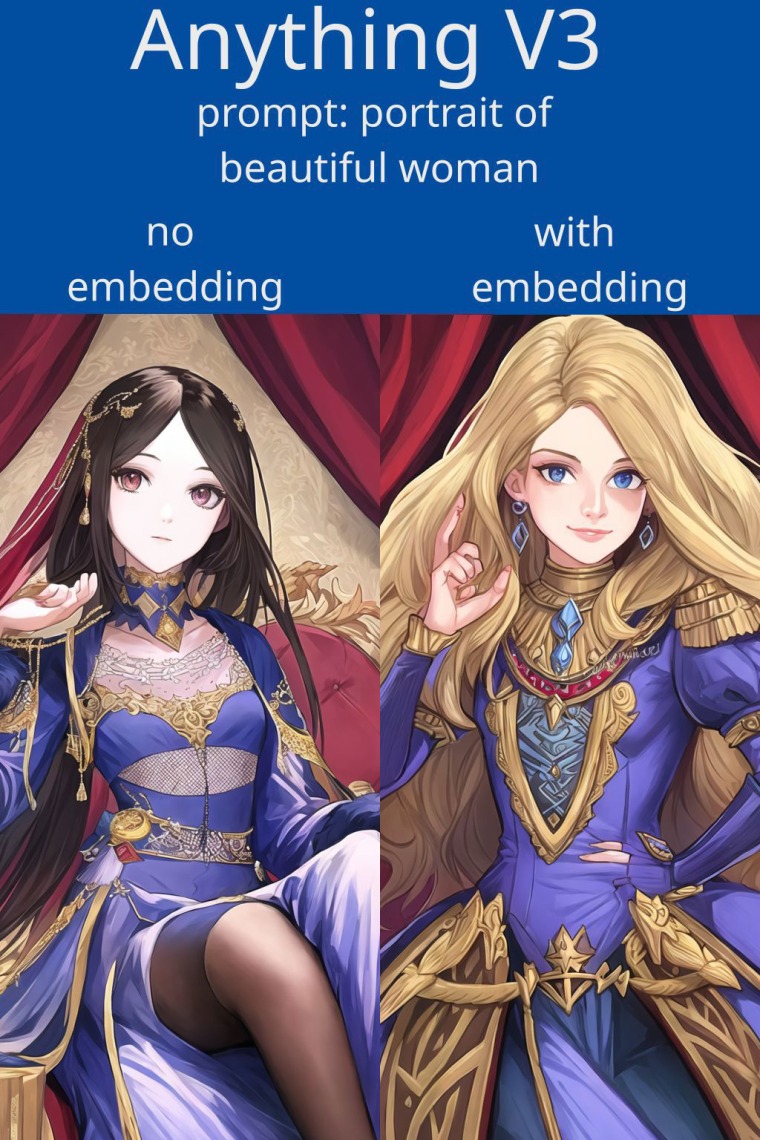

To offset what she perceived as the overuse of Asian features and culture in AI models, Preexa, who is Serbian but lives in the U.S., developed a new tool, “Style Asian Less,” to weed out the unprompted influence of Asian and Japanese animation in generated images.

“Style Asian Less” is an embedded module on Civitai, an AI art community where people can upload and share models that create photorealistic images from text descriptions. The tool has been downloaded more than 7,000 times on Civitai in the past two months.

“The tool isn’t designed to race-swap as some might think at first,” Preexa said, explaining that it simply counterbalances the strong Asian aesthetic in the training data of modern art models.

Sasha Luccioni, a Montreal-based AI ethics researcher at Hugging Face, an AI startup headquartered in New York City, said text-to-image generators tend to reinforce existing societal biases.

“The way that AI models are trained is that they tend to amplify the dominant class,” she said, noting that underrepresented groups, whether racial or economic, “tend to get drowned out.”

She found that AI art is overly influenced by the Asian traits infused into datasets by the large number of hobbyists in Asian countries. So Asian imagery might over-index in instances when someone in, for example, South America, is looking for representative imagery of people from their own country.

“All AI models have inherent biases that are representative of the datasets they are trained on. By open-sourcing our models, we aim to support the AI community and collaborate to improve bias evaluation techniques and develop solutions beyond basic prompt modification,” said Motez Bishara, a spokesperson for Stability AI.

While it’s difficult to gather accurate demographic data on AI artists, Luccioni said, it tracks that rising interest from Asia would introduce new biases into the models.

“You can fine-tune a Stable Diffusion model on data from Japan, and it’s going to learn those patterns and acquire the cultural stereotypes of that country,” she said.

Preexa, for her part, said she didn’t find the saturation of Asian imagery in AI art models problematic.

“The tool I made is just one of many to help a user get the image they want,” she said.

Casey Fiesler, an associate professor at the University of Colorado Boulder who specializes in AI ethics, said she has noticed a strong Asian aesthetic in many of the images produced by art generators.

“A lot of these models seem to have been trained on anime,” she said. “This issue of representation and what’s in the training data is a really interesting one. This is a new kind of wrinkle in it.”

The “Style Asian Less” tool’s aim of weeding out these AI models’ supposed bias toward Asian features, Fiesler said, is reminiscent of the bias-mitigation strategies of other AI initiatives, such as attempts to more accurately reflect the diversity of the general population. One example is making the imagery returned for a search for “CEO” more diverse. Some users have criticized such measures for distorting reality, however, noting that CEOs are disproportionately white men.

As AI art generators have exploded in popularity over the past year, some researchers and advocates have grown alarmed at their tendency to reinforce harmful stereotypes against women and people of color.

For Asian imagery specifically, Luccioni said there is an overrepresentation of hypersexual Asian imagery in the datasets that train AI models.

“There’s a lot of anime websites and hentai,” she said. “Specifically around women and Asian women, there’s a lot of content that’s objectifying them.”

When the AI app Lensa released its viral “Magic Avatars” feature last year, which generated dreamy portraits of people based on their selfies, many Asian women said the likenesses they received were overtly “pornified” and displayed only East Asian features.

Fiesler said that while she’s conflicted about the “Style Asian Less” model’s potential to introduce new stereotypes by filtering out characteristics associated with a specific racial group, it can be a useful instrument to address biases in the training data.

“But it’s important to understand that the AI isn’t reflecting reality,” she said. “It’s really reflecting their training data, which is reflective of what’s on the internet.”

Source: This article originally appeared on NBCNews.com.