Eighteen months after Facebook banned communities and users connected with the “Boogaloo” anti-government movement, the group’s extremist ideas were back and flourishing on the social media platform, new research found.

The paper, from George Washington University and Jigsaw, a unit inside Google that explores threats to open societies — including hate and toxicity, violent extremism and censorship — found that after Facebook’s June 2020 ban of the Boogaloo militia movement, the content “boomeranged,” first declining and then bouncing back to nearly its original volume.

“What this study says is, you can’t play whack-a-mole once and walk away,” said Beth Goldberg, Jigsaw’s head of research and development. “You need sustained content moderation — adaptive, sophisticated, content moderation, because these groups are adaptive and sophisticated.”

The research, which hasn’t been peer-reviewed, comes at a moment when many tech companies, including Facebook, are slashing their trust and safety departments, and content moderation efforts are being vilified and abandoned.

Teams within Facebook and outside workers contracted by Facebook monitor the platform for users and content that violate its policies against what the company calls Dangerous Organizations and Individuals. Facebook designated the Boogaloo movement “a violent US-based anti-government network” under that policy in 2020.

Meta, Facebook’s parent company, said in a statement that the new study is a “snapshot from one moment in time two years ago — we know this is an adversarial space that’s constantly changing, where perpetrators constantly try to find new ways around our policies. It is our priority to keep our platforms, and communities, safe. That’s why we keep investing heavily in people and technology to stay ahead of the evolving landscape, and then study and refine those tactics to ensure they’re effective at keeping our users and platforms safe.”

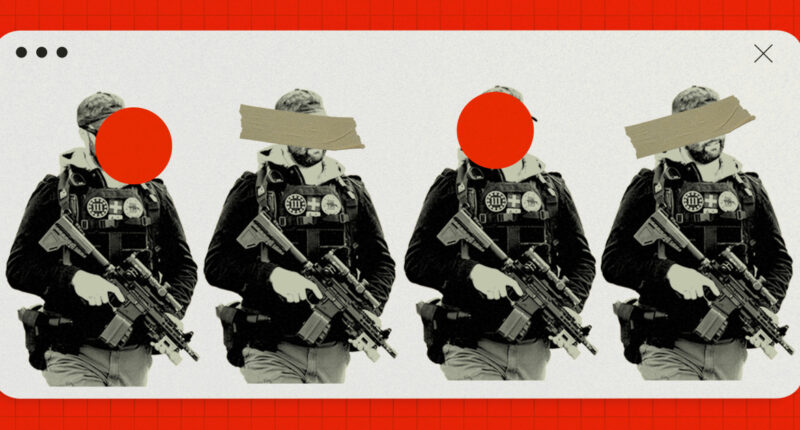

Members of the loose-knit Boogaloo movement were known for showing up to anti-lockdown demonstrations and George Floyd protests in 2020, carrying assault rifles and wearing body armor and Hawaiian shirts. They called for a second civil war, and some had even been accused of a number of violent crimes, including murder.

In its announcement of the ban back in the summer of 2020, Facebook said members of the Boogaloo militia movement were using the social media platform to organize and recruit. Facebook also acknowledged that the removal of hundreds of users, pages and groups was only a first step, as the company expected the group to try to return.

“So long as violent movements operate in the physical world, they will seek to exploit digital platforms,” Facebook’s blog post read. “We are stepping up our efforts against this network and know there is still more to do.”

Facebook was right, according to the new research from George Washington University and Jigsaw.

The researchers’ analysis used an algorithm that identified content from right-wing extremist movements, including Boogaloo, QAnon, white nationalists, and patriot and militia groups. The data used to train the algorithm included 12 million posts and comments from nearly 1,500 communities across eight online platforms, including Facebook, from June 2019 through December 2021.

The algorithm was trained to recognize text in posts from these extremist movements and identify reemerging Boogaloo content, not through identical keywords or images, but through similarities in rhetoric and style. Once the algorithm identified these posts, researchers confirmed the content was Boogaloo-related, finding explicitly Boogaloo aesthetics and memes that included Hawaiian shirts and red laser eyes.
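The article does not say what kind of model the researchers used, so the following is only a toy sketch of the general idea: matching text by stylistic similarity rather than by fixed keywords. It assumes character trigram counts as a crude stand-in for "rhetoric and style" and cosine similarity as the comparison. The function names (`char_ngrams`, `style_profile`, `rank_by_similarity`) and the neutral placeholder posts are hypothetical, not taken from the study.

```python
import math
from collections import Counter


def char_ngrams(text: str, n: int = 3) -> Counter:
    """Count overlapping character n-grams, a crude proxy for writing style."""
    cleaned = " ".join(text.lower().split())
    return Counter(cleaned[i:i + n] for i in range(len(cleaned) - n + 1))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[gram] for gram, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def style_profile(posts: list[str]) -> Counter:
    """Merge the n-gram counts of already-labeled posts into one profile."""
    profile = Counter()
    for post in posts:
        profile.update(char_ngrams(post))
    return profile


def rank_by_similarity(profile: Counter, candidates: list[str]) -> list[tuple[float, str]]:
    """Score each candidate against the profile, most similar first."""
    scored = [(cosine(profile, char_ngrams(text)), text) for text in candidates]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


# Hypothetical, neutral placeholder data (assumption: not from the study).
known_posts = [
    "meet at the usual spot and bring the gear",
    "bring the gear to the usual spot tonight",
]
candidates = [
    "bring the gear and meet at the usual place",
    "lemon cake recipe with vanilla frosting",
]

ranked = rank_by_similarity(style_profile(known_posts), candidates)
for score, text in ranked:
    print(f"{score:.2f}  {text}")
```

In a setup like this, high-scoring posts would only be candidates. As the article describes, the researchers then confirmed by hand that the flagged content was Boogaloo-related.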

“The coded language that they were using had totally evolved,” Goldberg said, noting that the movement appeared to discard terms like “boog” and “big igloo.” “This was a very intentional adaptation to evade the removals,” she said.

By their analysis, the initial Boogaloo ban worked, largely reducing the militia movement’s content, but 18 months after the ban, in late 2021, the quantity of Boogaloo-related content on public Facebook groups — including discussions of custom gun modifications and preparations for a civil war — had nearly returned to its pre-ban level.

Research published by Meta last week appears to support Jigsaw’s conclusions about the effectiveness of initial deplatforming: the removal of hate groups and their leaders did hamper the online operations of the groups.

However, in a blog post on the research published Friday, Jigsaw noted that an increasing interconnectedness between mainstream and alternative platforms (including Gab, Telegram and 4chan, which may lack the will or resources to remove hateful and violent extremist content) makes curbing this content a more complicated problem than ever.

Adept at circumventing enforcement, Boogaloo extremists were able to evade moderators by migrating to alternative platforms and, ultimately, back again.

The new study builds on a small body of academic research, which generally shows that when extremists are banned from mainstream platforms, their reach diminishes. The research also suggests a push to alternative platforms can have the unintended effect of radicalizing followers who move to smaller, unmoderated or private online spaces.

Jigsaw also posted results of a recent qualitative study in which researchers interviewed people who had had posts or accounts removed by mainstream social media platforms as a result of enforcement actions. They reported turning to alternative platforms and being exposed to more extreme content, some of which, in another boomerang effect, they posted back on mainstream platforms. Jigsaw reported that almost all the people interviewed ultimately returned to mainstream platforms.

“People wanted to be online where the public debates were unfolding,” Jigsaw researchers wrote.

Bans work initially, but they have to be enforced, said Marc-André Argentino, a senior research fellow at the Accelerationism Research Consortium, who studies extremist movements online and was not involved in the Jigsaw paper.

Of Meta, Argentino said, “They’ve basically put a Band-Aid on a gunshot wound. They’ve stopped the bleeding, but not dealt with the wound.”

Tech platforms’ efforts to keep online spaces safe are overly reliant on algorithmic devices and tools, Argentino said.

“They want to automate the process and that is not a sophisticated way to take down human beings who are actively working to come back on the platform to get their messages heard,” he said.

Meta has reportedly ended contracts with or laid off hundreds of workers in content moderation and trust and safety positions, according to documents filed with the U.S. Department of Labor. The recent cuts echo the gutting at other tech companies of teams that monitor hate speech and misinformation.

“They’re cutting teams, and there’s no resources to actually deal with the multitude of threats,” Argentino said. “Until there is a way to keep the platforms accountable, and they have to play ball in a meaningful way, it’s just gonna be these patchwork solutions.”

Source: This article originally appeared on Nbcnews.com