The tactics described in the post align with child sex abuse rings on Discord that have been busted by U.S. federal authorities over the last several years. Prosecutors have described rings with organized roles, including “hunters” who located young girls and invited them into a Discord server, “talkers” who were responsible for chatting with the girls and enticing them, and “loopers” who streamed previously recorded sexual content and posed as minors to encourage the real children to engage in sexual activity.

Shehan said his organization frequently gets reports from other tech platforms mentioning users and traffic from Discord, which he says is a sign that the platform has become a hub for illicit activity.

Redgrave said, “My heart goes out to the families who have been impacted by these kidnappings,” and added that oftentimes grooming occurs across numerous platforms.

Redgrave said Discord is working with Thorn, a well-known developer of anti-child-exploitation technology, on models that can potentially detect grooming behavior. A Thorn representative described the project as potentially helping “any platform with an upload button to detect, review, and report CSAM and other forms of child sexual abuse on the internet.” Currently, platforms are effectively able to detect images and videos already identified as CSAM, but they struggle with detecting new content or long-term grooming behaviors.

Despite the problem having existed for years, NBC News, in collaboration with researcher Matt Richardson of the U.S.-based nonprofit Anti-Human Trafficking Intelligence Initiative, was easily able to locate existing servers that showed clear signs of being used for child exploitation.

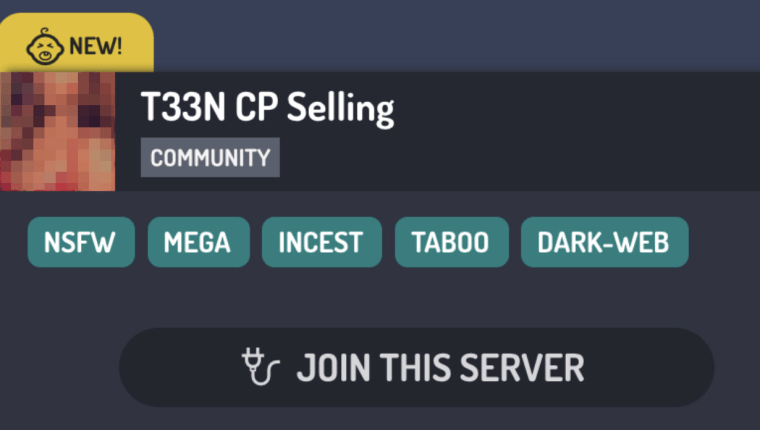

On websites that are dedicated to listing Discord servers, people promoted their servers using variations of words with the initials “CP,” short for child pornography, like “cheese-pizza” and “casual politics.” The descriptions of the groups were often more explicit, advertising the sale of “t33n” or teenage content. Discord does not run these websites.

In response to NBC News’ questions about the Discord servers, Redgrave said the company was trying to educate the third-party websites that promote them about measures they could take that could protect children.

In several servers, groups explicitly solicited minors to join “not safe for work” communities. One server promoted itself on a public server database, saying: “This is a community for people between the ages of 13-17. We trade nudes, we do events. Join us for the best Teen-NSFW experience 3.”

The groups appear to fit into the description of child sex abuse material production groups described by prosecutors, where adults pretend to be teens to entice real children into sharing nude images.

Inside one of the groups, users directly solicited nude images from minors to gain access. “YOU NEED TO SEND A VERIFICATION PHOTO TO THE OWNER! IT HAS TO BE NUDE,” one user wrote in the group. “WE ACCEPT ONLY BETWEEN 12 AND 17 YEARS OLD.”

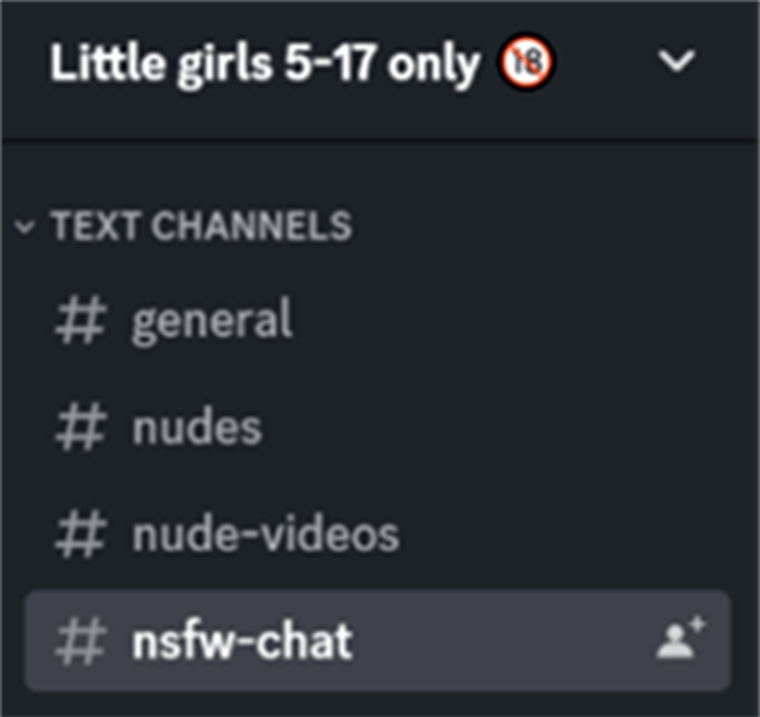

In another group, which specifically claimed to accept “little girls 5-17 only,” chat channels included the titles “begging-to-have-sex-chat,” “sexting-chat-with-the-server-owner,” “nude-videos,” and “nudes.”

Richardson said that he is working with law enforcement to investigate one group on Discord with hundreds of members who openly celebrate the extortion of children whom they say they’ve tricked into sharing sexual images, including images of self-harm and videos they say they’ve made children record.

NBC News did not enter the channels, and child sexual abuse material was not viewed in the reporting of this article.

Discord markets itself to kids and teens, advertising its functionality for school clubs on its homepage. But many of the other users on the platform are adults. The two age groups are allowed to freely mix. Some other platforms, such as Instagram, have instituted restrictions on how people under 18 can interact with people over 18.

The platform allows anyone to make an account and, like some other platforms, only asks for an email address and a birthday. Discord’s policies say U.S. users cannot join unless they’re at least 13 years old, but the company has no system to verify a user’s self-reported age. Age verification has become a hot-button issue in the world of social media policy, as legislation on the topic is being considered around the U.S. Some major platforms such as Meta have attempted to institute their own methods of age verification, and Discord is not the only platform that has yet to institute an age-verification system.

Redgrave said the company is “very actively investigating, and will invest in, age assurance technologies.”

Children under 13 are frequently able to make accounts on the app, according to the court filings reviewed by NBC News.

Despite the closed-off nature of the platform and the mix of ages on it, the platform has been transparent about its lack of oversight of the activities that occur on it.

“We do not monitor every server or every conversation,” the platform said on a page in its safety center. Instead, the platform says it mostly waits for community members to flag issues. “When we are alerted to an issue, we investigate the behavior at issue and take action.”

Redgrave said the company is testing out models that analyze server and user metadata in an effort to detect child safety threats.

Redgrave said the company plans to debut new models that will try to locate undiscovered trends in child exploitation content later this year.

Despite Discord’s efforts to address child exploitation and CSAM on its platform, numerous watchdogs and officials said more could be done.

Denton Howard, executive director of Inhope, an organization of missing and exploited children hotlines from around the world, said that Discord’s issues stem from a lack of foresight, similar to other companies.

“Safety by design should be included from Day One, not from Day 100,” he said.

Howard said Discord had approached Inhope and proposed being a donating partner to the organization. After a risk assessment and consultation with the members of the group, Inhope declined the offer and pointed out areas of improvement for Discord, which included slow response times to reports, communications issues when hotlines tried to reach out, hotlines receiving account warnings when they try to report CSAM, the continued hosting of communities that trade and create CSAM, and evidence disappearing before hearing back from Discord.

In an email from Discord to Inhope viewed by NBC News, the company said it was updating its policies around child safety and was working to implement “THORN’s grooming classifier.”

Howard said Discord had made progress since that March exchange, reinstituting the program that prioritizes reports from hotlines, but that Inhope had still not taken the company’s donation.

Redgrave said that he believes Inhope’s recommended improvements aligned with changes the company had long hoped to eventually implement.

Shehan noted that NCMEC had also had difficulties working with Discord, saying that the organization rescinded an invitation to Discord to become part of its Cybertipline Roundtable discussion, which includes law enforcement and top tipline reporters, after the company failed to “identify a senior child safety representative to attend.”

“It was really questionable, their commitment to what they’re doing on the child exploitation front,” Shehan said.

Detective Staff Sgt. Tim Brown of Canada’s Ontario Provincial Police said that he’d similarly been met with a vexing response from the platform recently that made him question the company’s practices around child safety. In April, Brown said Discord asked his department for payment after he had asked the company to preserve records related to a suspected child sex abuse content ring.

Brown said that his department had identified a Discord server with 120 participants that was sharing child sexual abuse material. When police asked Discord to preserve the records for their investigation, Discord suggested that it would cost an unspecified amount of money.

In an email from Discord to the Ontario police viewed by NBC News, Discord’s legal team wrote: “The number of accounts you’ve requested on your preservation is too voluminous to be readily accessible to Discord. It would be overly burdensome to search for or retrieve data that might exist. If you pursue having Discord search for and produce this information, first we will need to discuss being reimbursed for the costs associated with the search and production of over 20 identifiers.”

Brown said he had never seen another social media company ask to be reimbursed for maintaining potential evidence before.

Brown said that his department eventually submitted individual requests for each identifier, which were accepted by Discord without payment, but that the process took time away from other parts of the investigation.

“That certainly put a roadblock up,” he said.

Redgrave said that the company routinely asks law enforcement to narrow the scope of information requests but that the request for payment was an error: “This is not part of our response process.”

Source: This article originally appeared on Nbcnews.com