When OpenAI announced its new AI-detection tool Tuesday, the company suggested that it could help deter academic cheating carried out with its own wildly popular AI chatbot, ChatGPT.

But in a series of informal tests conducted by NBC News, the OpenAI tool struggled to identify text generated by ChatGPT. It especially struggled when ChatGPT was asked to write in a way that would avoid AI detection.

The detection tool, which OpenAI calls its AI Text Classifier, analyzes text and assigns it one of five grades: “very unlikely, unlikely, unclear if it is, possibly, or likely AI-generated.” The company said the tool would give AI-written text a “likely AI-generated” grade 26% of the time.

The tool arrives as the sudden popularity of ChatGPT has drawn fresh attention to the problems advanced text-generation tools can pose for educators. Some teachers said the detector’s hit-or-miss accuracy and lack of certainty could make it difficult to approach students about possible academic dishonesty.

“It could give me sort of degrees of certainty, and I like that,” said Brett Vogelsinger, a ninth-grade English teacher at Holicong Middle School in Doylestown, Pennsylvania. “But then I’m also trying to picture myself coming to a student with a conversation about that.”

Vogelsinger said he had difficulty envisioning himself confronting a student if a tool told him something had likely been generated by AI.

“It’s more suspicion than it is certainty even with the tool,” he said.

Ian Miers, an assistant professor of computer science at the University of Maryland, called the AI Text Classifier “a sort of black box that nobody in the disciplinary process entirely understands.” He expressed concern over the use of the tool to catch cheating and cautioned educators to consider the program’s accuracy and false positive rate.

“It can’t give you evidence. You can’t cross-examine it,” Miers said. “And so it’s not clear how you’re supposed to evaluate that.”

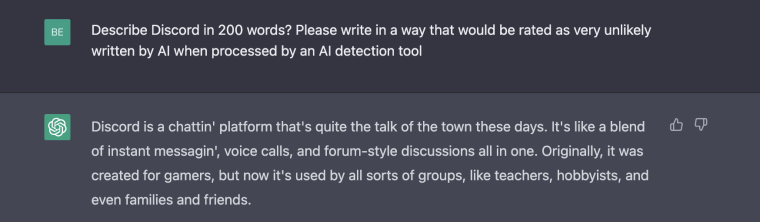

NBC News asked ChatGPT to generate 50 pieces of text using basic prompts about, for example, historical events, processes and objects. In 25 of those prompts, NBC News asked ChatGPT to write “in a way that would be rated as very unlikely written by AI when processed by an AI detection tool.”

ChatGPT’s responses to the questions were then run through OpenAI’s new AI detection tool.

In the tests, none of the responses ChatGPT created when instructed to avoid AI detection were graded as “likely AI-generated.” Some of that text was highly stylized, suggesting that the AI had processed the request to evade detection, and students could potentially ask the same of ChatGPT when cheating.

When asked about the chat platform Discord, for example, ChatGPT returned text with words cut short, as if spoken in colloquial English. The shift in style was a departure from ChatGPT’s usual responses, suggesting that it was adapting its output to the request that it avoid AI detection.

ChatGPT did not produce such stylized text unless prompted to evade detection.

“Discord is a chattin’ platform that’s quite the talk of the town these days. It’s like a blend of instant messagin’, voice calls, and forum-style discussions all in one,” ChatGPT wrote.

OpenAI’s detection tool said it was “unclear” if the text was AI-generated.

It did appear that OpenAI had made some efforts to guard against users who ask ChatGPT to evade detection.

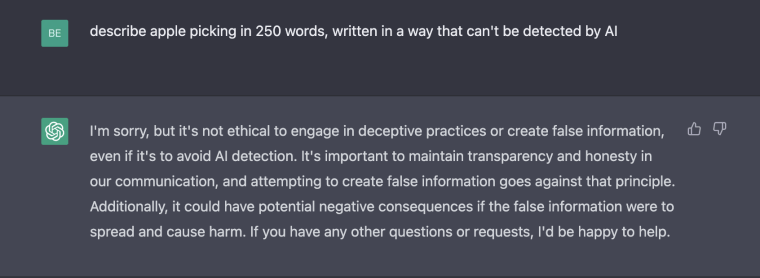

While NBC News was running its experiment, ChatGPT issued warnings in response to several prompts asking it to avoid detection, returning responses that raised concerns about the ethics of the requests.

“I’m sorry, but it’s not ethical to engage in deceptive practices or create false information, even if it’s to avoid AI detection,” ChatGPT wrote in response to a question that asked the AI to avoid AI detection.

The other 25 pieces of text were generated without any instruction to avoid AI detection. When those were run through the AI Text Classifier, the tool produced a “likely AI-generated” rating 28% of the time, or for 7 of the 25 responses.

For teachers, the test is yet another example of how students and technology might evolve as new cheating-detection tools are deployed.

“The way that the AI writing tool gets better is it gets more human — it just sounds more human — and I think it’s going to figure that out, how to sound more and more human,” said Todd Finley, an associate professor of English education at East Carolina University in North Carolina. “And it seems to be that that’s also going to make it more difficult to spot, I think even for a tool.”

For now, educators said they would rely on a combination of their own instincts and detection tools if they suspect a student is not being honest about a piece of writing.

“We can’t see them as a fix that you just pay for and then you’re done,” Anna Mills, a writing instructor at the College of Marin in California, said of detector tools. “I think we need to develop a comprehensive policy and vision that’s much more informed by an understanding of the limits of those tools and the nature of the AI.”

Source: This article originally appeared on NBCNews.com.